Authors: Mustafa (Moose) Abdool, Soumyadip Banerjee, Karen Ouyang, Do-Kyum Kim, Moutupsi Paul, Xiaowei Liu, Bin Xu, Tracy Yu, Hui Gao, Yangbo Zhu, Huiji Gao, Liwei He, Sanjeev Katariya

Introduction

Search plays a crucial role in helping Airbnb guests find the perfect stay. The goal of Airbnb Search is to surface the most relevant listings for each user’s query — but with millions of available homes, that’s no easy task. It’s especially difficult when searches include large geographic areas (like California or France) or high-demand destinations (like Paris or London). Recent innovations — such as flexible date search, which allows guests to explore stays without fixed check-in and check-out dates — have added yet another layer of complexity to ranking and finding the right results.

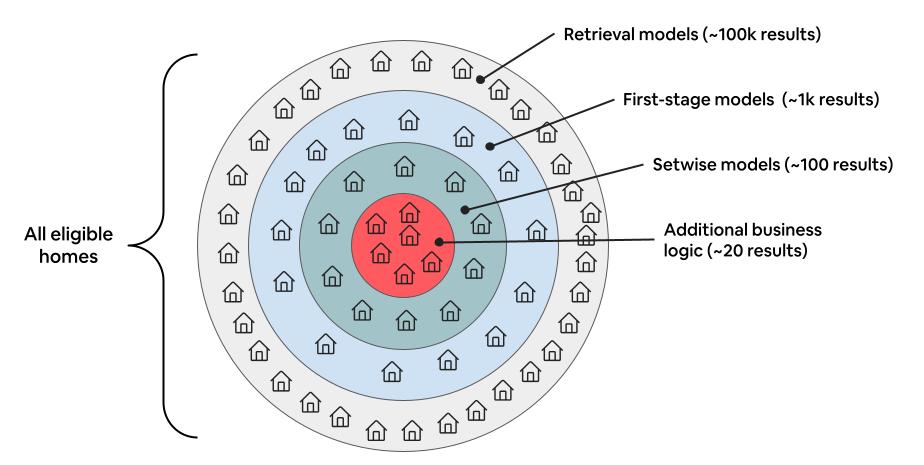

To tackle these challenges, we need a system that can retrieve relevant homes while also being scalable enough (in terms of latency and compute) to handle queries with a large candidate count. In this blog post, we share our journey in building Airbnb’s first-ever Embedding-Based Retrieval (EBR) search system. The goal of this system is to narrow down the initial set of eligible homes into a smaller pool, which can then be scored by more compute-intensive machine learning models later in the search ranking process.

Figure 1: The general stages and scale for the various types of ranking models used in Airbnb Search

We’ll explore three key challenges in building this EBR system: (1) constructing training data, (2) designing the model architecture, and (3) developing an online serving strategy using Approximate Nearest Neighbor (ANN) solutions.

Training Data Construction

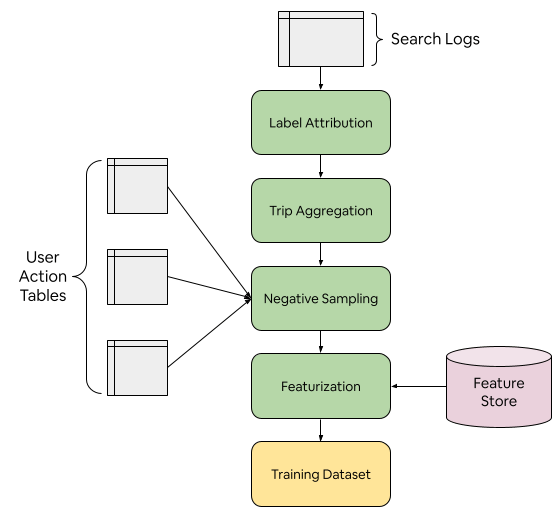

The first step in building our EBR system was training a machine learning model to map both homes and de-identified search queries into numerical vectors. To achieve this, we built a training data pipeline (Figure 3) that leveraged contrastive learning, a strategy that involves identifying pairs of positive- and negative-labeled homes for a given query. During training, the model learns to map a query, a positive home, and a negative home into numerical vectors such that the similarity between the query and the positive home is much higher than the similarity between the query and the negative home.
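To make the contrastive objective concrete, here is a minimal sketch of a triplet-style loss. The post does not name the exact loss function, so the hinge form, the `margin` value, and the function names below are assumptions for illustration only.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(query, positive, negative, margin=1.0):
    """Hinge-style triplet loss (an assumed form, not confirmed by the post).

    The loss is zero once the positive home is closer to the query than
    the negative home by at least `margin` (a hypothetical value).
    """
    d_pos = euclidean_distance(query, positive)
    d_neg = euclidean_distance(query, negative)
    return max(0.0, d_pos - d_neg + margin)


query_vec = [0.0, 0.0]
booked_home = [0.0, 1.0]      # positive: close to the query
seen_not_booked = [3.0, 4.0]  # negative: far from the query

# Positive already much closer than negative: nothing left to learn.
well_separated = triplet_loss(query_vec, booked_home, seen_not_booked)
# Roles swapped: the "positive" is farther away, so the loss is large.
violating = triplet_loss(query_vec, seen_not_booked, booked_home)
```

Minimizing a loss of this shape pushes booked homes toward the query embedding and pushes seen-but-not-booked homes away from it.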

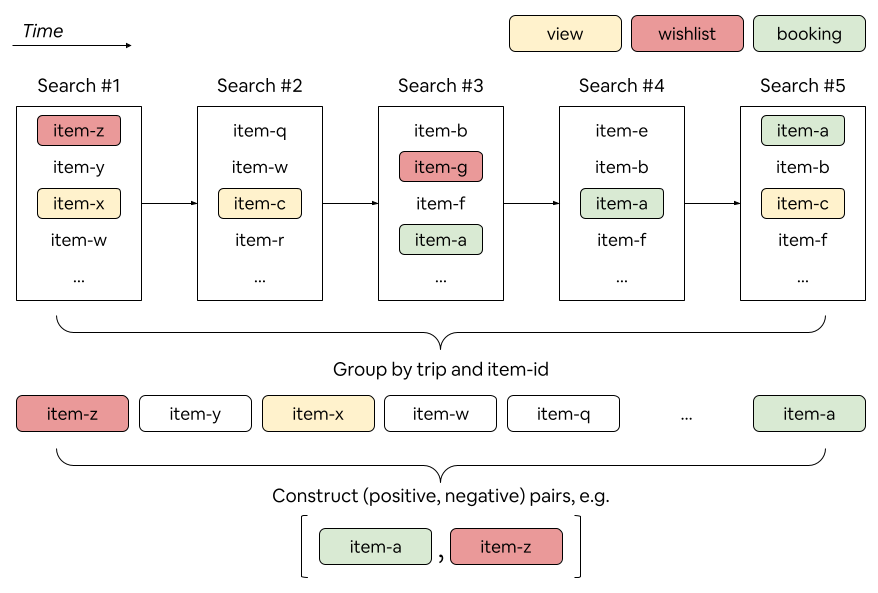

To construct these pairs, we devised a sampling method based on user trips. This was an important design decision, since users on Airbnb generally undergo a multi-stage search journey. Data shows that before making a final booking, users tend to perform multiple searches and take various actions — such as clicking into a home’s details, reading reviews, or adding a home to a wishlist. As such, it was crucial to develop a strategy that captures this entire multi-stage journey and accounts for the diverse types of listings a user might explore.

Diving deeper, we first grouped all historical queries of users who made bookings by key query parameters such as location, number of guests, and length of stay. Each such group is our definition of a “trip.” For each trip, we analyzed all searches performed by the user, with the final booked listing as the positive label. To construct (positive, negative) pairs, we paired this booked listing with other homes the user had seen but not booked. Negative labels were selected from homes the user encountered in search results, along with those they had interacted with more deliberately (for example, by wishlisting) but ultimately did not book. This choice of negative labels was key: randomly sampled homes made the problem too easy and resulted in poor model performance.
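The trip-based sampling described above can be sketched roughly as follows. The log schema (`user_id`, `location`, `guests`, `nights`, `seen_listing_ids`) and the function name are hypothetical; the actual pipeline and its fields are not described in the post.

```python
from collections import defaultdict


def build_training_triples(search_logs, bookings):
    """Group searches into trips and emit (query, positive, negative) triples.

    search_logs: list of dicts with hypothetical keys
        user_id, location, guests, nights, seen_listing_ids
    bookings: dict mapping a trip key to the booked listing id
    """
    trips = defaultdict(list)
    for log in search_logs:
        trip_key = (log["user_id"], log["location"], log["guests"], log["nights"])
        trips[trip_key].append(log)

    triples = []
    for trip_key, logs in trips.items():
        booked = bookings.get(trip_key)
        if booked is None:
            continue  # only trips that ended in a booking are used
        for log in logs:
            query = {
                "location": log["location"],
                "guests": log["guests"],
                "nights": log["nights"],
            }
            # Every home seen in this trip but not booked becomes a negative.
            for listing_id in log["seen_listing_ids"]:
                if listing_id != booked:
                    triples.append((query, booked, listing_id))
    return triples


search_logs = [
    {"user_id": "u1", "location": "Paris", "guests": 2, "nights": 3,
     "seen_listing_ids": [101, 102, 103]},
    {"user_id": "u1", "location": "Paris", "guests": 2, "nights": 3,
     "seen_listing_ids": [103, 104]},
    {"user_id": "u2", "location": "Rome", "guests": 1, "nights": 2,
     "seen_listing_ids": [201]},
]
bookings = {("u1", "Paris", 2, 3): 103}
triples = build_training_triples(search_logs, bookings)
```

In this toy input, user `u1` booked listing 103, so listings 101, 102, and 104 become negatives, while `u2` never booked and contributes nothing.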

Figure 2: Example of constructing (positive, negative) pairs for a given user journey. The booked home is always treated as a positive. Negatives are selected from homes that appeared in the search result (and were potentially interacted with) but that the user did not end up booking.

Figure 3: Example of overall data pipeline used to construct training data for the EBR model.

Model Architecture

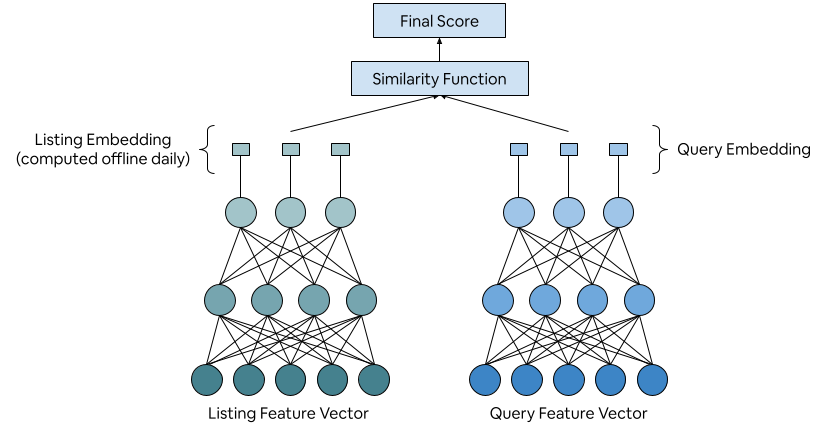

The model architecture followed a traditional two-tower network design. One tower (the listing tower) processes features about the home listing itself — such as historical engagement, amenities, and guest capacity. The other tower (the query tower) processes features related to the search query — such as the geographic search location, number of guests, and length of stay. Together, these towers generate the embeddings for home listings and search queries, respectively.

A key design decision here was choosing features such that the listing tower could be computed offline on a daily basis. This enabled us to pre-compute the home embeddings in a daily batch job, significantly reducing online latency, since only the query tower had to be evaluated in real-time for incoming search requests.
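The offline/online split can be illustrated with a toy sketch. Each tower is reduced here to a single linear layer with made-up weights; the real towers, their features, and their sizes are not specified in the post, so every name and number below is an assumption.

```python
def linear_tower(features, weights):
    """Toy stand-in for a tower: one linear layer mapping features to an embedding."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


# Hypothetical weights; a real model would learn these during training.
LISTING_WEIGHTS = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
QUERY_WEIGHTS = [[1.0, 0.0], [0.0, 1.0]]


def precompute_listing_embeddings(listing_features):
    """Offline daily batch job: embed every listing once and store the result."""
    return {
        listing_id: linear_tower(features, LISTING_WEIGHTS)
        for listing_id, features in listing_features.items()
    }


def embed_query(query_features):
    """Online path: only the query tower runs per search request."""
    return linear_tower(query_features, QUERY_WEIGHTS)


listing_features = {"home_a": [1.0, 0.0, 2.0], "home_b": [0.0, 3.0, 0.0]}
listing_index = precompute_listing_embeddings(listing_features)  # batch, offline
query_embedding = embed_query([2.0, 1.0])                        # per request
```

Because the listing tower depends only on features that change at most daily, its output can be cached, and the per-request cost is a single pass through the query tower.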

Figure 4: Two-tower architecture as used in the EBR model. Note that the listing tower is computed offline daily for all homes.

Online Serving

The final step in building our EBR system was choosing the infrastructure for online serving. We explored a number of approximate nearest neighbor (ANN) solutions and narrowed them down to two main candidates: inverted file index (IVF) and hierarchical navigable small worlds (HNSW). While HNSW performed slightly better on recall, our main evaluation metric, we ultimately found that IVF offered the best trade-off between speed and performance.
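The post does not say how recall was computed. One common definition for comparing ANN indexes, shown here purely as an assumed illustration, measures how many of the exact (brute-force) top-k neighbors the approximate search also returns.

```python
def recall_at_k(approx_ids, exact_ids, k):
    """Fraction of the exact top-k neighbors that the approximate search returned."""
    exact_top = set(exact_ids[:k])
    if not exact_top:
        return 0.0
    return len(exact_top & set(approx_ids[:k])) / len(exact_top)


exact_neighbors = [1, 2, 3, 4]   # hypothetical brute-force result
approx_neighbors = [1, 3, 5, 2]  # hypothetical ANN result
score = recall_at_k(approx_neighbors, exact_neighbors, k=4)
```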

The core reason for this is the high volume of real-time updates per second for Airbnb home listings, as pricing and availability data is frequently updated. This caused the memory footprint of the HNSW index to grow too large. In addition, most Airbnb searches include filters, especially geographic filters. We found that parallel retrieval with HNSW alongside filters resulted in poor latency performance.

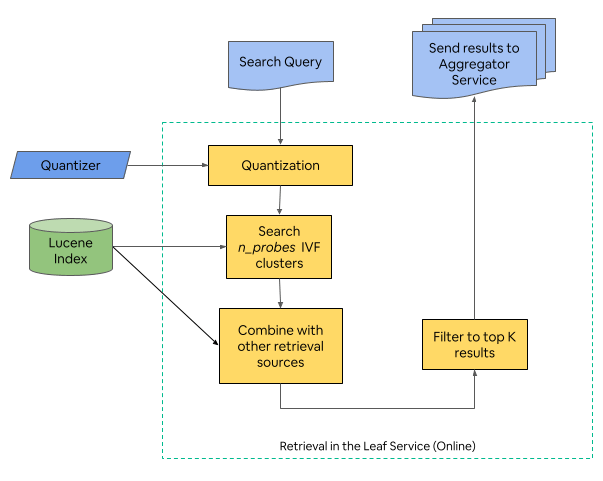

In contrast, the IVF solution, where listings are clustered beforehand, only required storing cluster centroids and cluster assignments within our search index. At serving time, we simply retrieve listings from the top clusters by treating the cluster assignments as a standard search filter, making integration with our existing search system quite straightforward.
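A minimal sketch of IVF-style retrieval through a cluster filter follows. It assumes the centroids were already computed offline (for example, by k-means); `n_probe` and all function names are illustrative and not taken from the production system.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def assign_clusters(listing_embeddings, centroids):
    """Offline: store each listing's nearest-centroid id alongside it in the index."""
    return {
        listing_id: min(range(len(centroids)),
                        key=lambda c: euclidean(emb, centroids[c]))
        for listing_id, emb in listing_embeddings.items()
    }


def retrieve(query_embedding, centroids, assignments, n_probe=1):
    """Online: pick the n_probe closest clusters, then filter listings by cluster id."""
    ranked = sorted(range(len(centroids)),
                    key=lambda c: euclidean(query_embedding, centroids[c]))
    probe = set(ranked[:n_probe])
    return sorted(lid for lid, c in assignments.items() if c in probe)


centroids = [[0.0, 0.0], [10.0, 10.0]]  # assumed precomputed offline
listing_embeddings = {
    "a": [1.0, 1.0], "b": [0.0, 2.0], "c": [9.0, 9.0], "d": [11.0, 10.0],
}
assignments = assign_clusters(listing_embeddings, centroids)
nearby = retrieve([8.0, 9.0], centroids, assignments, n_probe=1)
everything = retrieve([8.0, 9.0], centroids, assignments, n_probe=2)
```

The online step is just a membership test on a stored cluster id, which is why it can be expressed as an ordinary search filter and combined with geographic or availability filters.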

Figure 5: Overall serving flow using IVF. Homes are clustered beforehand and, during online serving, homes are retrieved from the closest clusters to the query embedding.

In this approach, our choice of similarity function in the EBR model itself ended up having interesting implications. We explored both dot product and Euclidean distance; while both performed similarly from a model perspective, using Euclidean distance produced much more balanced clusters on average. This was a key insight, as the quality of IVF retrieval is highly sensitive to cluster size uniformity: If one cluster had too many homes, it would greatly reduce the discriminative power of our retrieval system.
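Cluster balance can be quantified in several ways. The sketch below uses two simple statistics, the largest cluster relative to the mean and the coefficient of variation of cluster sizes; both are choices made for illustration, not metrics reported in the post.

```python
import statistics
from collections import Counter


def cluster_size_stats(assignments, n_clusters):
    """Summarize how evenly listings are spread across clusters.

    assignments: dict mapping listing id -> cluster id (assumed non-empty)
    """
    counts = Counter(assignments.values())
    sizes = [counts.get(c, 0) for c in range(n_clusters)]
    mean_size = statistics.mean(sizes)
    return {
        "sizes": sizes,
        # 1.0 means the largest cluster is exactly average-sized.
        "max_over_mean": max(sizes) / mean_size,
        # 0.0 means all clusters have the same size.
        "cv": statistics.pstdev(sizes) / mean_size,
    }


balanced = cluster_size_stats({"a": 0, "b": 0, "c": 1, "d": 1}, n_clusters=2)
skewed = cluster_size_stats({"a": 0, "b": 0, "c": 0, "d": 1}, n_clusters=2)
```

Comparing these statistics for clusterings built under each similarity function gives a quick numerical check to accompany a histogram of cluster sizes.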

We hypothesize that this imbalance arises with dot product similarity because it inherently only considers the direction of feature vectors while ignoring their magnitudes — whereas many of our underlying features are based on historical counts, making magnitude an important factor.

Figure 6: Example of the distribution of cluster sizes when using dot product vs. Euclidean distance as a similarity measure. We found that Euclidean distance produced much more balanced cluster sizes.

Results

The EBR system described in this post was fully launched in both Search and Email Marketing production and led to a statistically significant gain in overall bookings when A/B tested. Notably, the bookings lift from this new retrieval system was on par with some of the largest machine learning improvements to our search ranking in the past two years.

The key improvement over the baseline was that our EBR system effectively incorporated query context, allowing homes to be ranked more accurately during retrieval. This ultimately helped us display more relevant results to users, especially for queries with a high number of eligible results.

Acknowledgments

We would like to especially thank the entire Search and Knowledge Infrastructure & ML Infrastructure org (led by Yi Li) and Marketing Technology org (led by Michael Kinoti) for their great collaboration throughout this project!